Send Log4j 2 logs to Red Hat OpenShift Container Platform EFK stack

When developing applications, you can choose from many logging utility options based on your needs.

One of these logging utilities, Log4j 2, is commonly used for logging in applications.

Log4j 2 has an API that you can use to output log statements to various output targets.

In this blog post, we’ll show you how to forward your Log4j 2 logs into Red Hat OpenShift Container Platform’s (RHOCP) EFK (Elasticsearch, Fluentd, Kibana) stack so you can view and analyze them.

We’ll present two approaches to forward Log4j 2 logs using a sidecar container and a third approach to forward Log4j 2 logs to JUL (java.util.logging).

You might want to visualize both your Log4j 2 application logs and Liberty logs on the same Kibana dashboard to better monitor your applications and servers.

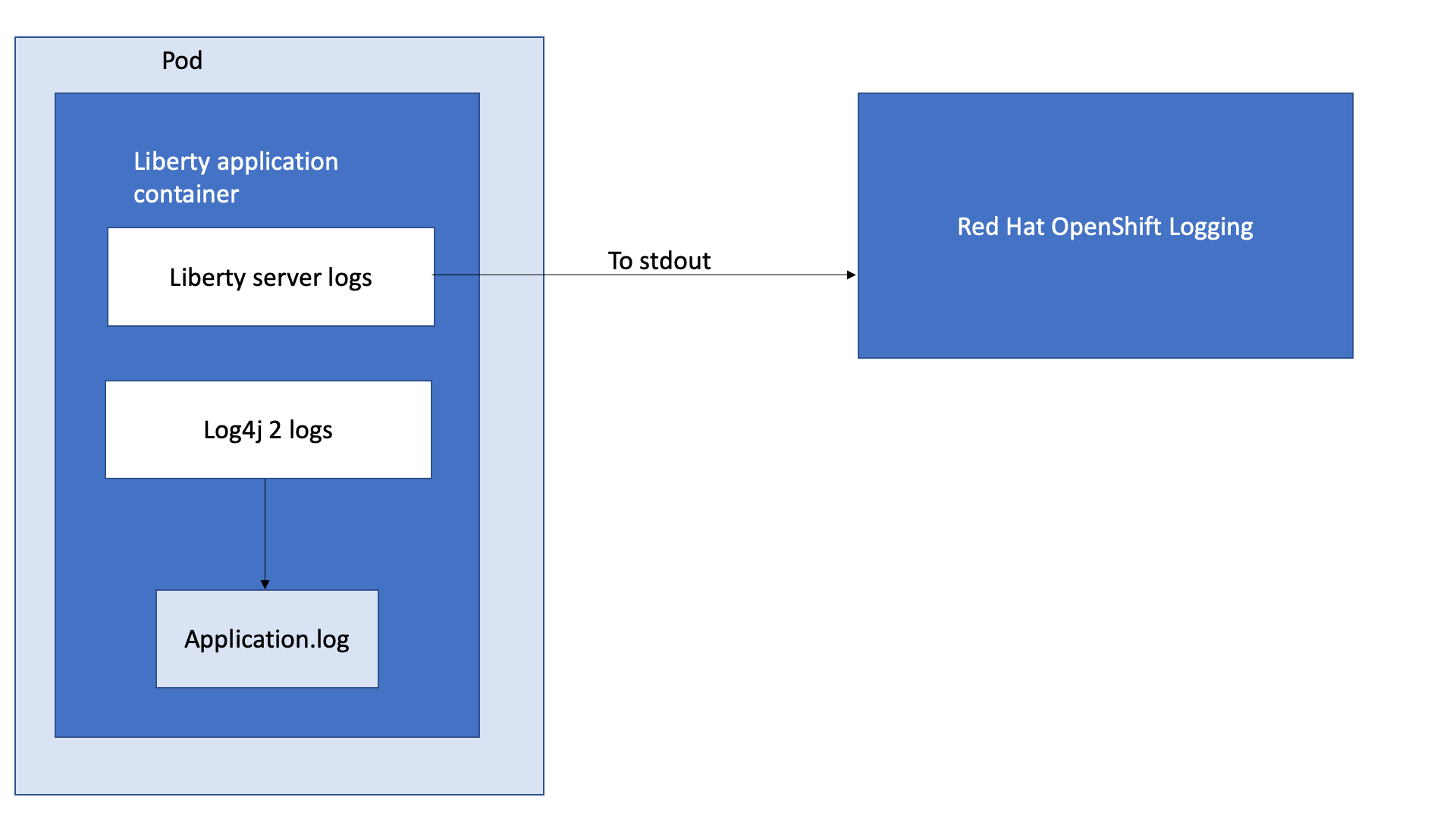

In Open Liberty logging, JUL logs are merged with Liberty logs and sent to messages.log/console, but Log4j 2 logs aren’t merged with Liberty logs.

Log4j 2 logs that are written to the file system aren’t automatically picked up when you run your application in a Docker container in OpenShift.

When you deploy your application on Open Liberty, you must specify where you want to send your Log4j 2 logs.

Sending Log4j 2 logs to RHOCP’s EFK stack using sidecar containers

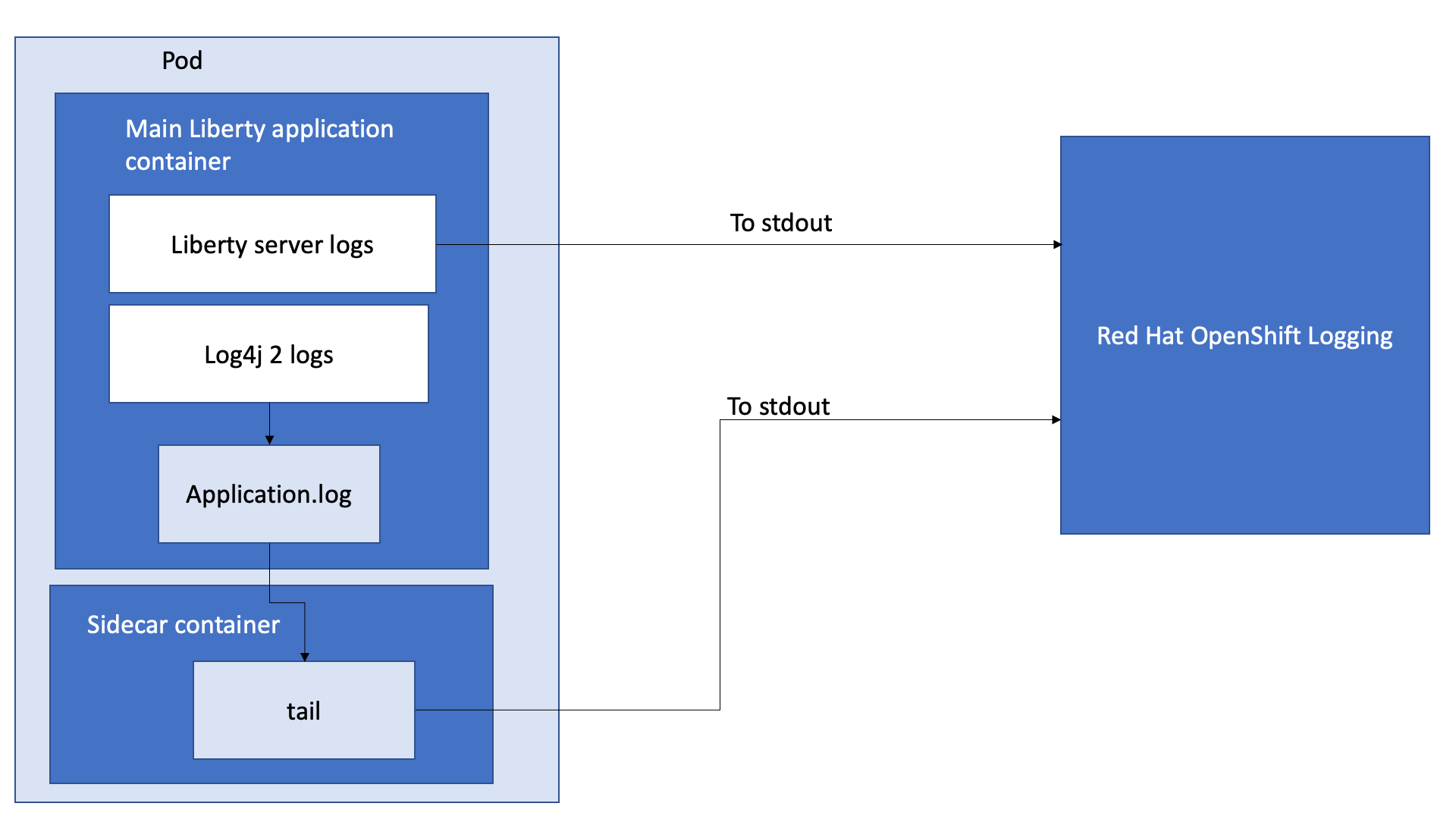

In Kubernetes environments, the best practice is to send your logs to stdout. The platform will be set up to consume content from the pod’s stdout and direct it to the logging facilities of the cluster. One of the ways to send your Log4j 2 logs to RHOCP’s EFK stack is by using sidecar containers. Pods can have multiple containers that work together. While one container creates and writes to a log file, another sidecar container, in the same pod, can be configured to read those files from the first container. In this case, the sidecar container can:

- Stream application logs to its own stdout.

- Run a logging agent, which is configured to pick up logs from an application container.

For either option, set up a shared volume for the directory of your application logs:

- For your deployed application, modify the deployment to define the shared volume:

      volumes:
        - name: outputlog
          emptyDir: {}

- Mount the volume to your main application container:

      volumeMounts:
        - name: outputlog
          mountPath: /output/logs

Forward Log4j 2 logs to the application’s stdout

By streaming application logs to its own stdout, the Liberty server logs are separated from the application logs. In Kibana, the logs appear as if they came from the sidecar container of the same pod.

- Configure a Log4j 2 Appender to send your logs to a File or RollingFile:

      <Appenders>
        <File name="File" fileName="${log-path}/app.log" append="true">
          <JsonLayout compact="true" eventEol="true"/>
        </File>
        <RollingFile name="DailyRolling" fileName="${log-path}/myexample.log" append="true"
                     filePattern="${log-path}/myexample-%d{yyyy-MM-dd}-%i.log">
          <JsonLayout compact="true" eventEol="true"/>
          <Policies>
            <TimeBasedTriggeringPolicy interval="1" modulate="true"/>
          </Policies>
        </RollingFile>
      </Appenders>

- Add the Appenders as references to your Logger. Each ref value must match the name attribute of an Appender:

      <Loggers>
        ...
        <Logger....>
          <AppenderRef ref="File"/>
          <AppenderRef ref="DailyRolling"/>
        </Logger>
        ....
      </Loggers>

  For more information about Log4j 2 Appenders, see the Apache Appender documentation.

-

Modify your application deployment by adding a sidecar container that tails your application logs. Write the log to a persistent volume for storage.

Create another container and mount the volume of the directory where the log is located. This example tails the logs to send them to stdout:

- name: app-sidecar image: 'linyusha/java-microprofile:latest' args: - /bin/sh - '-c' - tail -n+1 --retry -f /output/logs/app.log resources: {} volumeMounts: - name: outputlog mountPath: /output/logs -

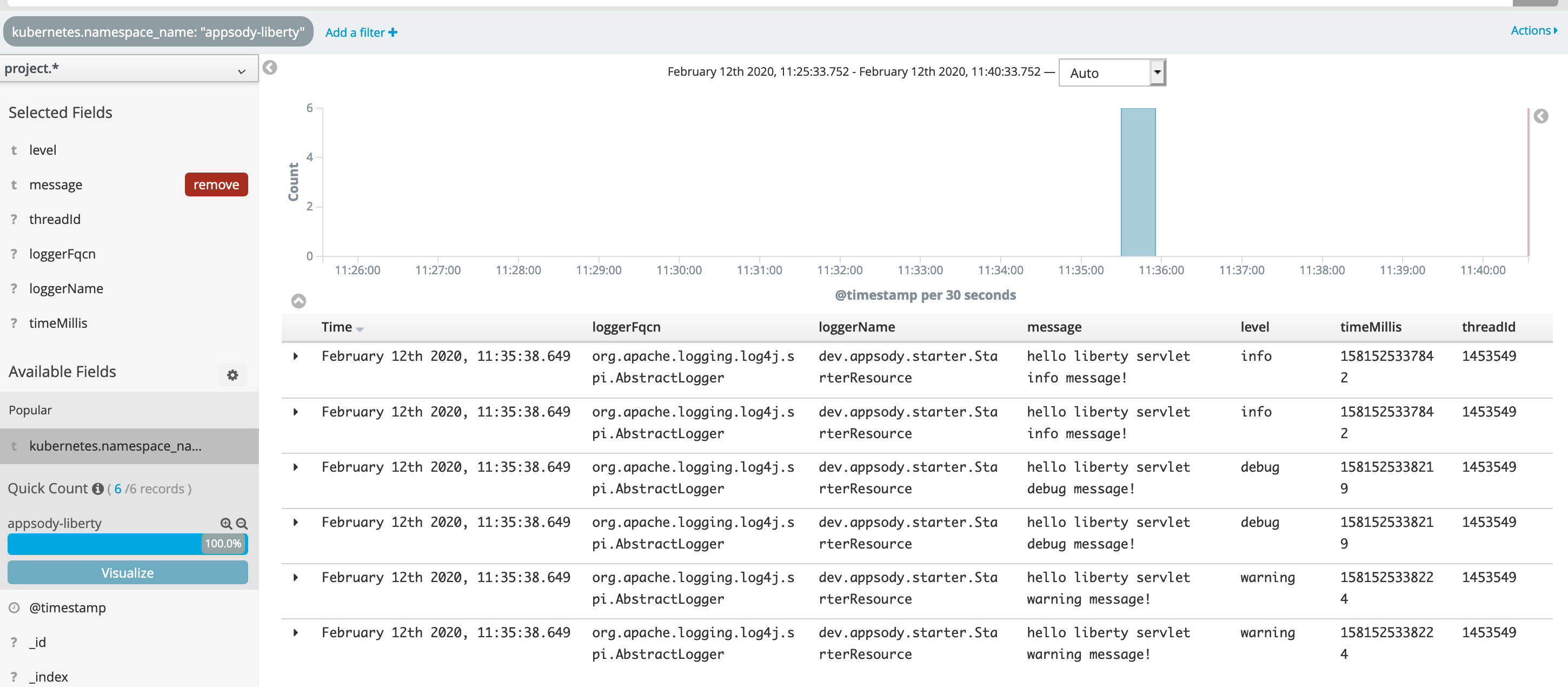

On your Kibana dashboard, you should see the application logs under the project.* index along with your other Liberty server logs:
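To make the sidecar's job concrete, here is a minimal Java sketch of what `tail -n+1 -f` does conceptually: start at the beginning of the file, remember how far it has read, and forward only complete new lines. The class `LogTailSketch` and its `poll` method are hypothetical names for illustration. The sidecar in this post uses the `tail` command itself, not this code.

```java
import java.io.IOException;
import java.io.RandomAccessFile;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.ArrayList;
import java.util.List;

/** Hypothetical illustration of a tailing sidecar; not part of Log4j 2 or OpenShift. */
class LogTailSketch {
    private final Path file;
    private long position = 0; // byte offset of the data already forwarded

    LogTailSketch(Path file) {
        this.file = file;
    }

    /** Returns the complete lines appended since the previous call. */
    List<String> poll() {
        List<String> lines = new ArrayList<>();
        if (!Files.exists(file)) {
            return lines; // like tail --retry: keep waiting for the file to appear
        }
        try (RandomAccessFile raf = new RandomAccessFile(file.toFile(), "r")) {
            long length = raf.length();
            if (length < position) {
                position = 0; // file was truncated or replaced: start over
            }
            byte[] buf = new byte[(int) (length - position)];
            raf.seek(position);
            raf.readFully(buf);
            // Only forward up to the last newline so a half-written event is never emitted.
            int end = buf.length;
            while (end > 0 && buf[end - 1] != '\n') {
                end--;
            }
            position += end;
            if (end > 0) {
                String chunk = new String(buf, 0, end, StandardCharsets.UTF_8);
                for (String line : chunk.split("\n")) {
                    if (!line.isEmpty()) {
                        lines.add(line);
                    }
                }
            }
            return lines;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) throws InterruptedException {
        // Path taken from the deployment example; override with the first argument.
        Path path = Path.of(args.length > 0 ? args[0] : "/output/logs/app.log");
        LogTailSketch tail = new LogTailSketch(path);
        while (true) {
            tail.poll().forEach(System.out::println); // stdout is what the platform collects
            Thread.sleep(1000);
        }
    }
}
```

Because the JsonLayout in the Appender configuration writes one event per line, forwarding line by line keeps each log event intact on stdout.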

Forward Log4j 2 logs using a logging agent

You can create a sidecar container with a separate logging agent that’s configured specifically to forward your application’s logs. This gives you the flexibility to use Fluentd to specify where you want to send your Log4j 2 logs. In this case, we are directing our logs to stdout to send them to RHOCP EFK stack.

- Create a Fluentd config map that specifies the source (where you want Fluentd to scrape your logs) and match (where you want to send the logs):

      apiVersion: v1
      kind: ConfigMap
      metadata:
        name: fluentd-config
      data:
        fluentd.conf: |
          <source>
            @type tail
            <parse>
              @type json
            </parse>
            path /output/logs/app.log
            pos_file /path/to/position/file/app.log.pos
            tag project.*
          </source>

          <match **>
            @type stdout
          </match>

- Create a sidecar container running Fluentd. The pod mounts a volume where Fluentd can pick up its configuration data. To modify your deployment:

  - Add the configMap as a volume to your deployment:

        volumes:
          - name: outputlog
            emptyDir: {}
          - name: config-volume
            configMap:
              name: fluentd-config

  - Create the sidecar container with Fluentd as the logging agent. Mount the shared log volume at the same path that the Fluentd source reads from (/output/logs):

        - name: count-agent
          image: k8s.gcr.io/fluentd-gcp:1.30
          env:
            - name: FLUENTD_ARGS
              value: -c /etc/fluentd-config/fluentd.conf
          volumeMounts:
            - name: outputlog
              mountPath: /output/logs
            - name: config-volume
              mountPath: /etc/fluentd-config

- The following code snippet and sample output apply to both sidecar container scenarios:

  - This code snippet is example log code in an application:

        LOGGER.info("hello liberty servlet info message!");
        LOGGER.debug("hello liberty servlet debug message!");
        LOGGER.log(Level.WARN, "hello liberty servlet warning message!");

  - If you add the preceding code snippet to your application, the following example is a sample output to stdout:

        {"timeMillis":1581629336498,"thread":"Default Executor-thread-20","level":"INFO","loggerName":"application.servlet.LibertyServlet","message":"hello liberty servlet info message!","endOfBatch":false,"loggerFqcn":"org.apache.logging.log4j.spi.AbstractLogger","threadId":65,"threadPriority":5}
        {"timeMillis":1581629336646,"thread":"Default Executor-thread-20","level":"DEBUG","loggerName":"application.servlet.LibertyServlet","message":"hello liberty servlet debug message!","endOfBatch":false,"loggerFqcn":"org.apache.logging.log4j.spi.AbstractLogger","threadId":65,"threadPriority":5}
        {"timeMillis":1581629336646,"thread":"Default Executor-thread-20","level":"WARN","loggerName":"application.servlet.LibertyServlet","message":"hello liberty servlet warning message!","endOfBatch":false,"loggerFqcn":"org.apache.logging.log4j.spi.AbstractLogger","threadId":65,"threadPriority":5}

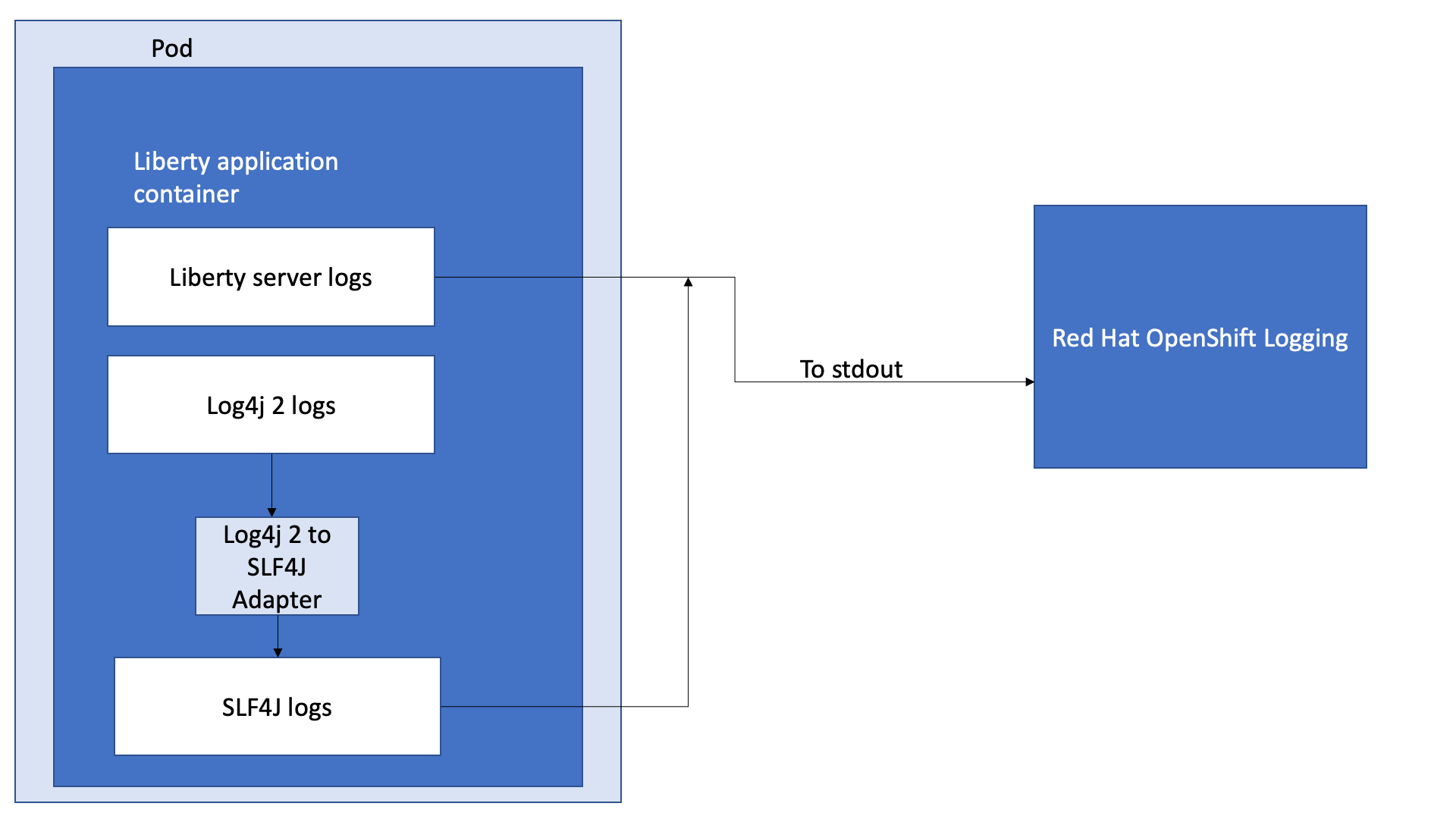
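Each line of the sample output is one self-contained JSON event, which is what makes the stream easy to consume downstream. As a rough sketch of that property, the following Java snippet pulls individual string fields out of a single output line. The class `JsonLineFieldSketch` and the method `stringField` are hypothetical names. The regular expression is a stand-in chosen to keep the example dependency-free: it does not unescape values, and a real consumer would use a proper JSON parser.

```java
import java.util.Optional;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

/** Hypothetical helper for illustration only; not a general-purpose JSON parser. */
class JsonLineFieldSketch {

    /** Returns the raw (still escaped) value of a top-level string field, if present. */
    static Optional<String> stringField(String jsonLine, String name) {
        // "name" : "value", where value may contain backslash-escaped characters.
        Pattern pattern = Pattern.compile(
                "\"" + Pattern.quote(name) + "\"\\s*:\\s*\"((?:[^\"\\\\]|\\\\.)*)\"");
        Matcher matcher = pattern.matcher(jsonLine);
        return matcher.find() ? Optional.of(matcher.group(1)) : Optional.empty();
    }

    public static void main(String[] args) {
        // Shortened copy of the first sample line above.
        String line = "{\"timeMillis\":1581629336498,"
                + "\"thread\":\"Default Executor-thread-20\","
                + "\"level\":\"INFO\","
                + "\"loggerName\":\"application.servlet.LibertyServlet\","
                + "\"message\":\"hello liberty servlet info message!\"}";

        System.out.println(stringField(line, "level").orElse("?")
                + " " + stringField(line, "loggerName").orElse("?")
                + " " + stringField(line, "message").orElse("?"));
    }
}
```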

Sending Log4j 2 logs to JUL

In the examples that use sidecar containers, Log4j 2 logs are forwarded to RHOCP, but they aren’t merged with Liberty logs. An alternative way to forward your Log4j 2 logs to RHOCP is by merging your Log4j 2 logs with Liberty logs. To merge these logs, you can use the Log4j 2 to SLF4J Adapter to send Log4j 2 logs to JUL.

Forward Log4j 2 logs using the Log4j 2 to SLF4J Adapter

Another way to direct your Log4j 2 logs to RHOCP’s EFK stack is using the Log4j 2 to SLF4J Adapter. SLF4J can be configured to use JUL as the underlying implementation. The Log4j 2 to SLF4J Adapter allows applications coded to the Log4j 2 API to be routed to SLF4J.

You can use this technique to merge your Log4j 2 logs with Liberty logs. Using this adapter may cause some loss of performance, as the Log4j 2 messages are formatted before they can be passed to SLF4J. After logs are passed to SLF4J, they’re formatted and merged with Liberty logs before being passed to console.log/stdout.

- To use this adapter, add the following dependencies to your pom.xml file:

      <dependency>
        <groupId>org.apache.logging.log4j</groupId>
        <artifactId>log4j-to-slf4j</artifactId>
        <version>2.13.0</version>
      </dependency>
      <dependency>
        <groupId>org.slf4j</groupId>
        <artifactId>slf4j-jdk14</artifactId>
        <version>1.7.7</version>
      </dependency>
      <dependency>
        <groupId>org.slf4j</groupId>
        <artifactId>slf4j-api</artifactId>
        <version>1.7.25</version>
      </dependency>

- Enable JSON logging in Open Liberty by adding the following properties to the bootstrap.properties file under your server directory:

      # generate console log in json and route the following sources
      com.ibm.ws.logging.console.source=message, trace, ffdc, audit, accessLog
      com.ibm.ws.logging.console.format=json
      com.ibm.ws.logging.console.log.level=INFO

The following log is an example output:

{"type":"liberty_message","host":"192.168.0.104","ibm_userDir":"\/Users\/[email protected]\/Documents\/archived-guide-log4j\/finish\/target\/liberty\/wlp\/usr\/","ibm_serverName":"log4j.sampleServer","message":"hello liberty servlet info message!","ibm_threadId":"00000035","ibm_datetime":"2020-02-13T11:27:07.789-0500","module":"application.servlet.LibertyServlet","loglevel":"INFO","ibm_methodName":"doGet","ibm_className":"application.servlet.LibertyServlet","ibm_sequence":"1581611227789_0000000000016","ext_thread":"Default Executor-thread-8"}

{"type":"liberty_trace","host":"192.168.0.104","ibm_userDir":"\/Users\/[email protected]\/Documents\/archived-guide-log4j\/finish\/target\/liberty\/wlp\/usr\/","ibm_serverName":"log4j.sampleServer","message":"hello liberty servlet debug message!","ibm_threadId":"00000035","ibm_datetime":"2020-02-13T11:27:07.791-0500","module":"application.servlet.LibertyServlet","loglevel":"FINE","ibm_methodName":"doGet","ibm_className":"application.servlet.LibertyServlet","ibm_sequence":"1581611227791_0000000000001","ext_thread":"Default Executor-thread-8"}

{"type":"liberty_message","host":"192.168.0.104","ibm_userDir":"\/Users\/[email protected]\/Documents\/archived-guide-log4j\/finish\/target\/liberty\/wlp\/usr\/","ibm_serverName":"log4j.sampleServer","message":"hello liberty servlet warning message!","ibm_threadId":"00000035","ibm_datetime":"2020-02-13T11:27:07.792-0500","module":"application.servlet.LibertyServlet","loglevel":"WARNING","ibm_methodName":"doGet","ibm_className":"application.servlet.LibertyServlet","ibm_sequence":"1581611227792_0000000000017","ext_thread":"Default Executor-thread-8"}

If you want to learn more about JSON logging with Open Liberty, check out this short YouTube video or this blog post about Adding custom fields to JSON logs in Open Liberty.
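The sample output above shows that level names change on the way through SLF4J and JUL: the DEBUG message arrives with loglevel FINE (and type liberty_trace), and the WARN message arrives as WARNING. The following Java sketch records that mapping so you know which loglevel value to search for in Kibana. The INFO, DEBUG, and WARN rows come from the sample output. The TRACE, ERROR, and FATAL rows are assumptions based on the usual behavior of the SLF4J JDK14 binding and aren't shown in this post. The class and method names are hypothetical.

```java
import java.util.Locale;
import java.util.logging.Level;

/** Hypothetical lookup for illustration; the real translation happens inside the adapters. */
class Log4jToJulLevelSketch {

    /** Returns the JUL level that a Log4j 2 level name is expected to end up as. */
    static Level toJul(String log4jLevel) {
        switch (log4jLevel.toUpperCase(Locale.ROOT)) {
            case "TRACE":
                return Level.FINEST;  // assumption, not shown in the sample output
            case "DEBUG":
                return Level.FINE;    // seen in the sample output ("loglevel":"FINE")
            case "INFO":
                return Level.INFO;    // seen in the sample output
            case "WARN":
                return Level.WARNING; // seen in the sample output ("loglevel":"WARNING")
            case "ERROR":
            case "FATAL":
                return Level.SEVERE;  // assumption, not shown in the sample output
            default:
                throw new IllegalArgumentException("Unknown Log4j 2 level: " + log4jLevel);
        }
    }

    public static void main(String[] args) {
        for (String level : new String[] {"TRACE", "DEBUG", "INFO", "WARN", "ERROR", "FATAL"}) {
            System.out.println(level + " -> " + toJul(level));
        }
    }
}
```

Because DEBUG events become FINE, they're emitted as trace events, which is why the trace source is included in com.ibm.ws.logging.console.source in the configuration above.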

Summary

As you’ve learned in this post, there are different ways to forward your Log4j 2 and other non-JUL logs to RHOCP’s EFK stack. You can use a sidecar container to forward logs directly to stdout, or you can run the sidecar container as your logging agent. You can also implement the Log4j 2 to SLF4J Adapter to merge Log4j 2 logs with your Liberty logs and output them in JSON format.

For more information about logging in Open Liberty, see the Open Liberty logging documentation. If you want to try out another step-by-step tutorial on logging in EFK, check out this Kabanero guide on Application Logging on RHOCP. Or, if you want to learn about Open Liberty and the ELK (Elasticsearch, Logstash, Kibana) stack, check out this video on Using Liberty with Elastic Stack (aka ELK).